Experiment and optimise

13 minutes

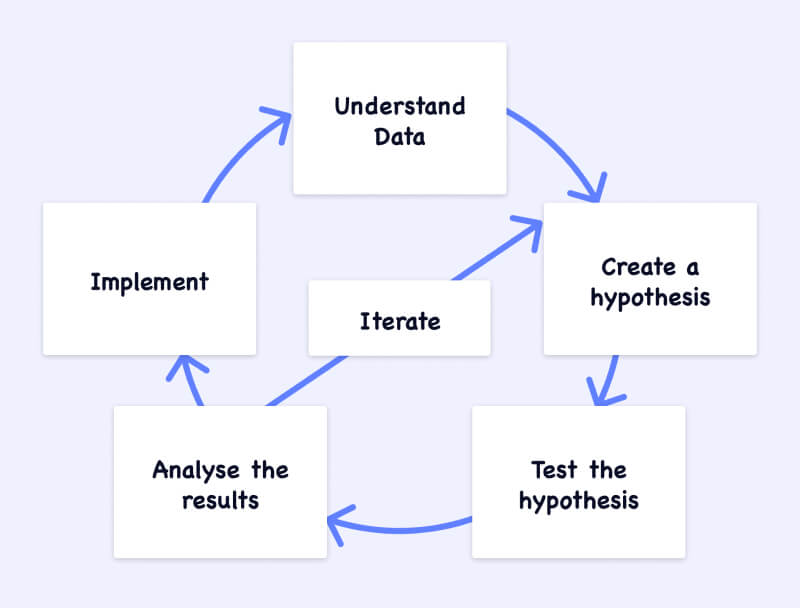

Let's take a look at experimentation and optimisation - this is a powerful process and one you will need when progressing in your career!

Experimentation allows us to safely and confidently roll out new features and UI changes by running tests on a percentage of our user base. By running experiments in this iterative process, we can make incremental changes to our products and optimise our results.
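One common way to expose a change to only a percentage of users is to hash each user ID into a stable bucket. The sketch below is a minimal illustration, not any particular tool's implementation; the function name `in_experiment`, the experiment name, and the 10% rollout are all assumptions made up for the example.

```python
import hashlib


def in_experiment(user_id: str, experiment: str, rollout_percent: float) -> bool:
    """Return True if this user falls inside the rollout percentage.

    Hypothetical helper: the name and the bucketing scheme are illustrative.
    """
    # Hash the experiment name together with the user ID so that each
    # experiment gets its own repeatable split of the user base.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the hash onto 10,000 buckets (0..9999), i.e. steps of 0.01%.
    bucket = int(digest[:8], 16) % 10_000
    return bucket < rollout_percent * 100


# The same user always gets the same answer for the same experiment.
print(in_experiment("user-42", "highlight-popular-filters", 10))
```

Because the decision depends only on the user ID and the experiment name, a returning user keeps seeing the same experience for the lifetime of the test.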

This cycle is similar to the Lean UX cycle of Think, Make, Check.

Start with data

All experiments have to start with data, which can be either qualitative or quantitative. Starting with data allows us to make informed hypotheses which we can have more confidence in. For example, this could be a quantitative data point from your analytics that says users are twice as likely to convert when two or more filters are applied to a set of search results, or some common themes in user feedback which show you what features to focus on.
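To make the filters example concrete, here is a small sketch of how such a data point might be derived from session records. The records are invented purely for illustration and the record shape `(filters_applied, converted)` is an assumption; real analytics data would look different.

```python
# Invented session records for illustration: (filters_applied, converted)
sessions = [
    (0, False), (0, False), (0, True), (0, False),
    (1, False), (1, True), (1, False), (1, False),
    (2, True), (2, False), (3, True), (4, False),
]


def conversion_rate(records):
    """Share of the given sessions that ended in a conversion."""
    records = list(records)
    if not records:
        return 0.0
    return sum(1 for _, converted in records if converted) / len(records)


# Compare sessions with fewer than two filters against two or more.
few_filters = conversion_rate(s for s in sessions if s[0] < 2)
many_filters = conversion_rate(s for s in sessions if s[0] >= 2)

print(f"fewer than 2 filters: {few_filters:.0%}")
print(f"2 or more filters:    {many_filters:.0%}")
```

A gap like this is only a starting point: it shows a correlation worth investigating, which is exactly what the hypothesis and test in the next steps are for.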

Create your hypotheses

Now that you have a strong data point to start from, you can create a hypothesis. A hypothesis is a statement made on the basis of limited evidence as a starting point for further investigation. It follows a general format:

"We believe that if we do X, Y will happen.

We will know this to be true if we see an increase in Z metric"

If you work with personas, you may also find it useful to state which persona you are designing for.

Based on our previously stated data point around the use of filters, we could create the following hypothesis: “We believe that by highlighting popular filters, we will make it easier for users to find the products they are looking for. We will know this to be true if we see an uplift in conversion and filter use.”

Test the hypothesis

Now we can design and create a test around this hypothesis. Popular methods for testing are A/B testing and multivariate testing (MVT):

A/B testing is the most popular form of experimentation. In this type of test, we create two or more variations of the same page but we make a change to just one element. We could change the copy on a button, make small UI changes, or even test new features. Generally, a variant will be tested against the 'base' design, which is the version that is already live.

MVT, or multivariate testing, is similar to A/B testing, except that multiple elements are changed at the same time. Each portion of your user base sees one of the possible combinations, in order to find the best-performing combination of those changes. MVTs require a lot more traffic than A/B tests to reach statistical significance, because the traffic is split across every combination.
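A quick way to see why an MVT needs so much more traffic is to count the combinations. The sketch below enumerates them with `itertools.product`; the element names, their options, and the visitor count are hypothetical and only there to illustrate the arithmetic.

```python
from itertools import product

# Hypothetical page elements under test and the options for each.
elements = {
    "headline": ["current", "benefit-led"],
    "button_copy": ["Buy now", "Add to basket"],
    "filter_layout": ["sidebar", "top bar", "highlighted"],
}


def all_combinations(elements):
    """Return every combination of options as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


variants = all_combinations(elements)
visitors = 60_000  # assumed total traffic available for the test

# 2 x 2 x 3 options gives 12 combinations to split the traffic across.
print(len(variants), "combinations")
print(visitors // len(variants), "visitors per combination")
print(visitors // 2, "visitors per variation in a two-way A/B test")
```

Each extra element multiplies the number of combinations, so the traffic each one receives shrinks quickly.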

Statistical significance is a measure of how unlikely it is that the change in metrics between a variation and the baseline is due to random chance alone. It's standard practice to work to 95% statistical significance, which means that if the change really had no effect, there would be only a 5% chance of seeing a difference this large purely by chance.
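As a rough illustration of how significance can be checked for conversion rates, the sketch below uses a two-sided two-proportion z-test. This is one classical approach, not necessarily what your testing tool uses, and the visitor and conversion counts are made up for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates.

    conv_* are conversion counts and n_* are visitor counts.
    Assumes at least one conversion and one non-conversion overall.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that there is no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Made-up results: base converts 500 of 10,000, variant 580 of 10,000.
p_value = two_proportion_p_value(500, 10_000, 580, 10_000)
print(f"p-value: {p_value:.4f}")
print("significant at 95%" if p_value < 0.05 else "not significant at 95%")
```

A p-value below 0.05 corresponds to the 95% threshold described above.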

In order to achieve statistical significance when testing, you will need to reach a certain number of users. Optimizely have a handy sample size calculator which can help you understand how much traffic you will need to reach based on baseline metrics and your minimum detectable effect.
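To get a feel for how baseline rate and minimum detectable effect drive the traffic you need, here is a sketch of the classical fixed-sample formula for comparing two conversion rates. It is not the method behind any particular vendor's calculator, so expect different numbers from different tools. The 80% power default and the function name are assumptions added for the example.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_mde:  minimum detectable effect relative to baseline, e.g. 0.10
    alpha:         1 minus the significance level (0.05 for 95%)
    power:         chance of detecting a real effect (0.8 is an assumed default)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# A 5% baseline with a 10% relative lift needs roughly 31,000 per variation.
print(sample_size_per_variant(0.05, 0.10))
# Halving the detectable effect roughly quadruples the traffic needed.
print(sample_size_per_variant(0.05, 0.05))
```

The smaller the effect you want to detect, the more users each variation needs, which is why low-traffic pages are usually better suited to testing bolder changes.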

Analyse the results

Once you have given the test enough time to reach significance, it's time to analyse the results. If the results support your hypothesis, amazing! Remember to take time to celebrate your win with your teammates before moving to the next step! You have just made an incremental, evidence-backed change to your product. It is not a guarantee, but a result that is significant at the 95% level gives you good reason to expect a similar impact on your metrics when the change is put in front of 100% of your traffic.

If not, why not? Use your new learnings to go back and inform and adjust the hypothesis, then iterate through the steps again.

Implement the winner

If we have enough evidence to suggest that an idea would have value at scale, we implement the winning variation for all users. Once implemented, we continue to measure to gain new data.

This new data takes us back to the first step of the process: start with data. Now that we have new data, we can create new hypotheses and then iterate the cycle over and over again, implementing winning tests and building incremental results.